AVM SDK simple.NET library

Contents

- 1 Implementation of the algorithm AVM (Associative Video Memory) for C#

- 1.1 Approach

- 1.2 Algorithm features

- 1.3 What can the AVM algorithm give us? How can it be useful?

- 1.4 Library organization (implementation)

- 1.5 Quick start

- 1.6 Description of methods

- 1.6.1 void Create (Size aKeyImgSize, short aLevelMax, int aTreeTotal, bool aClustering);

- 1.6.2 void Write (Rectangle aInterestArea, int apData, bool aDeepLearning);

- 1.6.3 bool Read (Rectangle aInterestArea, ref Rectangle apSearchArea, ref IntPtr appData, ref UInt64 apIndex, ref UInt64 apHitCounter, ref double apSimilarity, bool aTotalSearch);

- 1.6.4 Array ObjectRecognition ();

- 1.6.5 Array ObjectTracking (bool aTrainingDuringTracking, double aSimilarityUpTrd, double aSimilarityDnTrd);

- 1.6.6 void Destroy ();

- 1.6.7 void SetActiveTree (int aTreeIdx);

- 1.6.8 void ClearTreeData ();

- 1.6.9 bool Save (string aFileName, bool aSaveWithoutOptimization);

- 1.6.10 bool Load (string aFileName);

- 1.6.11 CvRcgData WritePackedData ();

- 1.6.12 void ReadPackedData (CvRcgData aRcgData);

- 1.6.13 void OptimizeAssociativeTree ();

- 1.6.14 void RestartTimeForOptimization ();

- 1.6.15 double EstimateOpportunityForTraining (Rectangle aInterestArea);

- 1.6.16 void SetImage (ref Bitmap apSrcImg);

- 1.6.17 int GetTotalABases ();

- 1.6.18 short GetTotalLevels ();

- 1.6.19 UInt64 GetCurIndex ();

- 1.6.20 UInt64 GetWrRdCounter ();

- 1.6.21 Size GetKeyImageSize ();

- 1.6.22 Size GetBaseKeySize ();

- 1.6.23 void SetParam (CvAM_ParamType aParam, double aValue);

Implementation of the algorithm AVM (Associative Video Memory) for C#

Approach

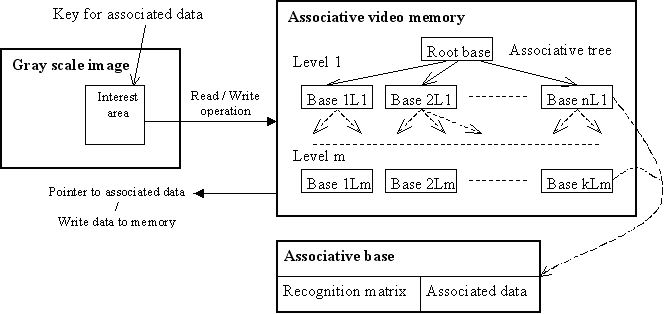

The "Associative Video Memory" (AVM) algorithm uses the principle of multilevel decomposition of recognition matrices. The AVM search tree stores recognition matrices together with their associated data. The upper levels of the tree hold coarse matrices with a small number of coefficients, while the lower levels hold more detailed matrices (with a larger number of coefficients). The matrices describe the location of brightness regions of objects in an invariant form (the matrix coefficients do not depend on the overall illumination level). During recognition the image is scanned with a window; each window position yields an input matrix, which is then searched for in the AVM tree. If the absolute difference between the coefficients of the input matrix and those of a matrix stored in the AVM does not exceed a specified threshold, the object is recognized.
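The two ideas in the paragraph above, coefficients that do not depend on overall illumination and a match test against a threshold on the absolute coefficient difference, can be illustrated with a toy C# sketch. The article does not say how AVM actually computes its matrices, so the block averaging, the division by mean brightness, and the names InvariantMatrixSketch, Build and Matches are assumptions made only for illustration; this is not the AVM implementation.

```csharp
using System;

// Toy illustration only, not the AVM algorithm: the real coefficient formula is not
// described in this article.
public static class InvariantMatrixSketch
{
    // Reduces a grayscale patch (patch[y, x]) to an n x n matrix of block averages,
    // each divided by the mean brightness of the whole patch. Scaling every pixel by
    // the same factor leaves the result unchanged (illumination invariance).
    public static double[,] Build(byte[,] patch, int n)
    {
        int h = patch.GetLength(0), w = patch.GetLength(1);
        if (n <= 0 || h % n != 0 || w % n != 0)
            throw new ArgumentException("Patch size must be divisible by n.");
        int bh = h / n, bw = w / n;
        var m = new double[n, n];
        double total = 0;
        for (int y = 0; y < h; y++)
            for (int x = 0; x < w; x++)
            {
                m[y / bh, x / bw] += patch[y, x]; // accumulate block sums
                total += patch[y, x];
            }
        double mean = total / (h * w);
        for (int i = 0; i < n; i++)
            for (int j = 0; j < n; j++)
                m[i, j] = mean > 0 ? m[i, j] / (bh * bw) / mean : 0.0; // block average / mean
        return m;
    }

    // Declares a match when no coefficient differs by more than the threshold in absolute value.
    public static bool Matches(double[,] input, double[,] stored, double threshold)
    {
        if (input.GetLength(0) != stored.GetLength(0) || input.GetLength(1) != stored.GetLength(1))
            return false;
        for (int i = 0; i < input.GetLength(0); i++)
            for (int j = 0; j < input.GetLength(1); j++)
                if (Math.Abs(input[i, j] - stored[i, j]) > threshold)
                    return false;
        return true;
    }
}
```

In a multilevel tree, a coarse matrix such as Build(patch, 2) would be compared first, and a finer one such as Build(patch, 4) only at lower levels; scaling every pixel of a patch by the same factor leaves both matrices unchanged.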

Each matrix in the AVM is associated with user data. The AVM uses the interest area (an image fragment) as the key for data access: an input recognition matrix is created from the interest area, and the AVM tree is then searched for similar matrices.

The number of tree levels and the dimensions of the matrices depend on the key image size, which the user defines when creating a new instance of the AVM (see method "Create").

The "Associative Video Memory" algorithm in detail: [1]

Algorithm features

The AVM algorithm is robust to camera noise, scales well, and is simple and fast to train. It also runs acceptably fast on high-resolution input video (960x720 and above). The algorithm works with grayscale images.

What can the AVM algorithm give us? How can it be useful?

The "Associative Video Memory" algorithm is a commercial project, but you can use the AVM SDK free of charge in your non-commercial projects.

- You can use the AVM algorithm as a recognizer in your research on efficient navigation solutions for robotics. You can test your hypotheses about robot navigation based on landmark beacons with AVM. If you arrive at a successful navigation solution, you then have two options: develop your own pattern recognition algorithm and use it to replace AVM in your finished (commercial) project, or use the commercial version of the AVM algorithm in your finished project.

- AVM can also be used for testing your own recognition algorithm during its development.

Library organization (implementation)

The "AVM SDK simple.NET" package consists of two dynamic libraries:

* Avm061.dll - implementation of the AVM algorithm;
* AVM_api.dll - wrapper providing the C# interface.

The source files of the interface library AVM_api.dll are located in .\AVM_SDK_simple.net\AVM_api (project file AVM_api.csproj).

To connect the AVM algorithm to your project, include the libraries avm061.dll and AVM_api.dll. The AVM algorithm takes an image in Bitmap format as input and, after processing, outputs an array of object descriptors if any objects were recognized.

The AVM SDK simple.NET package can be downloaded here: [2]

Quick start

What do you need for training?

1. Create an instance of AVM: _am = new CvAssociativeMemory32S();
2. Initialize the instance (methods: Create, Load, or ReadPackedData): _am.Load(cRcgDataFileName);
3. Set the image for processing: _am.SetImage(ref aSrcImage);
4. Write the new image fragment (interest area) with the object index to the AVM search tree: _am.Write(InterestArea, ObjIndex, true).

What do you need for recognition?

1. Perform steps 1-3 described above;
2. Read an array of object descriptors (methods: ObjectTracking/ObjectRecognition): Array SeqObjDsr = _am.ObjectTracking(true, 0.54, 0.45);
3. If the array is not empty (objects were found), process the descriptors of the recognized objects in a loop.

More information can be found in the AVM SDK examples directory: .\AVM_SDK_simple.net\samples

Description of methods

void Create (Size aKeyImgSize, short aLevelMax, int aTreeTotal, bool aClustering);

Method "Create" initializes an instance of AVM.

aKeyImgSize - the key image size. In most cases the optimal value is 80x80 pixels.

aLevelMax - the maximum number of search tree levels. If this parameter is zero, it is set to the optimal value by default.

aTreeTotal - the total number of search trees (when working with a collection of trees). The default is 1 (one search tree).

aClustering - a flag that enables additional cluster matrices in the search tree (for faster search). Enabled (true) by default.

void Write (Rectangle aInterestArea, int apData, bool aDeepLearning);

The "Write" method binds the user data (second parameter) to an image fragment (first parameter). For the type CvAssociativeMemory32S the user data is the index of the object. These data can then be read with the "Read" method: by scanning an image with windows of different sizes using "Read", you can find the object(s). The ObjectTracking/ObjectRecognition methods search in a similar way.
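Scanning an image with windows of different sizes amounts to generating candidate interest areas at several scales. The following sketch only produces the rectangles; in a real program each one would be passed as aInterestArea to Read. The helper name, the square windows, and the scale and stride parameters are hypothetical and are not part of AVM_api.dll.

```csharp
using System;
using System.Collections.Generic;
using System.Drawing;

// Hypothetical helper, not part of AVM_api.dll.
public static class WindowScanSketch
{
    // Yields square windows of side minSide, minSide * scaleStep, ... up to the smaller
    // image dimension; each window is shifted by strideFraction of its own side.
    public static IEnumerable<Rectangle> Windows(Size image, int minSide, double scaleStep, double strideFraction)
    {
        // Validate eagerly, then hand off to the lazy iterator.
        if (minSide <= 0 || scaleStep <= 1.0 || strideFraction <= 0.0)
            throw new ArgumentException("Invalid scan parameters.");
        return Enumerate(image, minSide, scaleStep, strideFraction);
    }

    private static IEnumerable<Rectangle> Enumerate(Size image, int minSide, double scaleStep, double strideFraction)
    {
        int limit = Math.Min(image.Width, image.Height);
        for (double side = minSide; side <= limit; side *= scaleStep)
        {
            int s = (int)side;
            int stride = Math.Max(1, (int)(s * strideFraction));
            for (int y = 0; y + s <= image.Height; y += stride)
                for (int x = 0; x + s <= image.Width; x += stride)
                    yield return new Rectangle(x, y, s, s); // candidate aInterestArea
        }
    }
}
```

For a 160x160 image with minSide 80, scaleStep 2.0 and strideFraction 0.5, this yields nine 80x80 windows and one 160x160 window.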

aInterestArea - the interest area (image fragment) containing the object;

apData - user data;

aDeepLearning - a flag for "deep" training (additional scanning in the vicinity of the object). Enabled (true) by default.

bool Read (Rectangle aInterestArea, ref Rectangle apSearchArea, ref IntPtr appData, ref UInt64 apIndex, ref UInt64 apHitCounter, ref double apSimilarity, bool aTotalSearch);

Reads from memory the data (third parameter) that was associated with an image fragment (first parameter).

aInterestArea - the interest area (image fragment);

apSearchArea - the rectangle where the object was found;

appData - pointer to the user data;

apIndex - the index of the associative base from which the data was read;

apHitCounter - the hit counter of the associative base;

apSimilarity - similarity to the model (if the similarity is greater than 0.5, the object was recognized);

aTotalSearch - a flag for "total" search down to the last level of the search tree (it finds the most similar associative base in the search tree even if recognition did not happen).

The method returns true if the object is found in interest area.

Array ObjectRecognition ();

Scans the image using the "Read" method and returns a descriptor array of recognized objects.

Array ObjectTracking (bool aTrainingDuringTracking, double aSimilarityUpTrd, double aSimilarityDnTrd);

Performs object search and tracking. Returns an array of descriptors of the tracked objects.

aTrainingDuringTracking - a flag for additional training during object tracking. Enabled (true) by default.

aSimilarityUpTrd - the upper similarity threshold: additional training on the tracked object is performed when the similarity is below this value (default is 0.54).

aSimilarityDnTrd - the lower similarity threshold: additional training on the tracked object can still be performed while the similarity is above this value (default is 0.45).
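The rule for aSimilarityUpTrd and aSimilarityDnTrd can be expressed as a simple band test. This sketch assumes strict comparisons, since the article does not say whether the bounds themselves are included; the class and method names are hypothetical.

```csharp
using System;

// Hypothetical illustration of the training band; not code from AVM_api.dll.
public static class TrackingTrainingSketch
{
    public const double DefaultUpTrd = 0.54; // default of aSimilarityUpTrd
    public const double DefaultDnTrd = 0.45; // default of aSimilarityDnTrd

    // True when a tracked object's similarity lies strictly between the two thresholds
    // (strictness is an assumption).
    public static bool WouldTrain(double similarity, double upTrd = DefaultUpTrd, double dnTrd = DefaultDnTrd)
    {
        if (dnTrd > upTrd)
            throw new ArgumentException("dnTrd must not exceed upTrd.");
        return similarity > dnTrd && similarity < upTrd;
    }
}
```

The recognition level of 0.5 lies inside the default band (0.45, 0.54), so with the defaults both weakly recognized objects and tracked objects slightly below 0.5 are candidates for additional training.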

The structure of the object descriptor CvObjDsr32S:

* State - the state of the recognition function;
* ObjRect - the image area where the object was found;
* Similarity - similarity to the model (if the similarity is greater than 0.5, the object was recognized);
* Data - user data associated with the object;
* Trj - the trajectory of the object (an array of 100 points).

State:

* cRecognizedObject - an image fragment was recognized;
* cGeneralizedObject - a generalized rectangle of the object was calculated (method "ObjectRecognition");
* cTrackedObject - the rectangle of a tracked object was calculated (method "ObjectTracking");
* cLearnThisObject - indicates additional training.
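Step 3 of the recognition procedure, processing the descriptors in a loop, might look like the sketch below. Because the real CvObjDsr32S type lives in AVM_api.dll, the enum and record here are hypothetical stand-ins that mirror only the fields listed above (Trj is omitted); with the real library you would iterate over the Array returned by ObjectTracking or ObjectRecognition instead.

```csharp
using System.Collections.Generic;
using System.Drawing;

// Hypothetical stand-ins mirroring the documented fields of CvObjDsr32S;
// the real types are defined in AVM_api.dll and may differ.
public enum ObjState { cRecognizedObject, cGeneralizedObject, cTrackedObject, cLearnThisObject }

public record ObjDsr(ObjState State, Rectangle ObjRect, double Similarity, int Data);

public static class DescriptorSketch
{
    // Keeps descriptors whose similarity exceeds 0.5 (the recognition level stated above)
    // and groups their rectangles by the user data, i.e. the object index.
    public static Dictionary<int, List<Rectangle>> RecognizedByIndex(IEnumerable<ObjDsr> descriptors)
    {
        var result = new Dictionary<int, List<Rectangle>>();
        foreach (var d in descriptors)
        {
            if (d.Similarity <= 0.5) continue; // not recognized
            if (!result.TryGetValue(d.Data, out var list))
                result[d.Data] = list = new List<Rectangle>();
            list.Add(d.ObjRect);
        }
        return result;
    }
}
```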

void Destroy ();

Completely frees all memory structures used by the AVM. After this call the AVM instance cannot continue to work until it is initialized again with one of the methods: Create, Load, or ReadPackedData.

void SetActiveTree (int aTreeIdx);

Selects the current search tree from the collection of trees (see the third parameter of "Create").

aTreeIdx - the zero-based index of the search tree.

void ClearTreeData ();

Calling "ClearTreeData" resets the search tree, but the data structures created by the "Create" method remain, so the AVM can continue training with the "Write" method.

bool Save (string aFileName, bool aSaveWithoutOptimization);

Saves recognition data to a file.

aFileName - filename;
aSaveWithoutOptimization - a flag controlling optimization of the search tree before saving. The default is enabled (true).

Returns true if the recognition data was saved successfully.

bool Load (string aFileName);

Loads recognition data from a file.

aFileName - filename;

Returns true if the recognition data was loaded successfully.

CvRcgData WritePackedData ();

Writes the recognition data as an array of bytes; the data can then be placed in a custom structure (together with other user data) and saved to a file.

void ReadPackedData (CvRcgData aRcgData);

Reads recognition data from the byte array produced by the "WritePackedData" method.
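A custom structure that keeps the bytes from WritePackedData together with other user data could be serialized as shown below. The container layout (a label, a UTC timestamp, then a length-prefixed byte array) and the class name RcgBundle are assumptions; the article does not describe how to extract the bytes from CvRcgData, so the sketch works with a plain byte[].

```csharp
using System;
using System.IO;
using System.Text;

// Hypothetical container, not an AVM file format.
public sealed class RcgBundle
{
    public string Label { get; set; } = "";
    public DateTime SavedAtUtc { get; set; }
    public byte[] RecognitionData { get; set; } = Array.Empty<byte>(); // bytes from WritePackedData

    public void WriteTo(Stream s)
    {
        using var w = new BinaryWriter(s, Encoding.UTF8, leaveOpen: true);
        w.Write(Label);                   // length-prefixed string
        w.Write(SavedAtUtc.ToBinary());   // 64-bit timestamp
        w.Write(RecognitionData.Length);  // byte count first...
        w.Write(RecognitionData);         // ...then the raw recognition data
    }

    public static RcgBundle ReadFrom(Stream s)
    {
        using var r = new BinaryReader(s, Encoding.UTF8, leaveOpen: true);
        var b = new RcgBundle
        {
            Label = r.ReadString(),
            SavedAtUtc = DateTime.FromBinary(r.ReadInt64()),
        };
        int n = r.ReadInt32();
        b.RecognitionData = r.ReadBytes(n);
        if (b.RecognitionData.Length != n)
            throw new EndOfStreamException("Truncated recognition data.");
        return b;
    }
}
```

The byte[] read back would then be converted to CvRcgData and passed to ReadPackedData.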

void OptimizeAssociativeTree ();

Optimizes the search tree by removing associative bases whose number of hits is below the defined threshold.

void RestartTimeForOptimization ();

Resets the timer that counts the time until the next optimization of the search tree.

double EstimateOpportunityForTraining (Rectangle aInterestArea);

Returns a coefficient that indicates how suitable the interest area (aInterestArea) is for training. If the method returns a value less than 0.015, the interest area cannot be recognized effectively after training.
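The 0.015 limit suggests checking candidate interest areas before calling Write. The sketch below picks the candidate with the highest estimate and rejects those below 0.015. The estimator is passed in as a delegate, so in a real program it could be the method group _am.EstimateOpportunityForTraining, whose documented signature matches Func<Rectangle, double>; the helper itself is hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Drawing;

// Hypothetical helper; only the 0.015 limit comes from the documentation above.
public static class TrainingAreaSketch
{
    public const double MinOpportunity = 0.015;

    // Returns the candidate with the highest estimate, or null if every candidate
    // falls below MinOpportunity.
    public static Rectangle? BestTrainingArea(IEnumerable<Rectangle> candidates, Func<Rectangle, double> estimate)
    {
        Rectangle? best = null;
        double bestScore = 0.0;
        foreach (var c in candidates)
        {
            double score = estimate(c);
            if (score < MinOpportunity) continue; // would not be recognized effectively
            if (best == null || score > bestScore)
            {
                best = c;
                bestScore = score;
            }
        }
        return best;
    }
}
```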

void SetImage (ref Bitmap apSrcImg);

Sets the image apSrcImg for further processing (training/recognition).

int GetTotalABases ();

Returns the total number of associative bases in the search tree.

short GetTotalLevels ();

Returns the total number of levels in the search tree.

UInt64 GetCurIndex ();

Returns the current index of the latest associative base that was added to the search tree.

UInt64 GetWrRdCounter ();

Returns the read/write loop counter.

Size GetKeyImageSize ();

Returns the key image size that was set during initialization by the "Create" method.

Size GetBaseKeySize ();

Returns the base key size (40, 80, 160, ..., 10 * 2^n).
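The base key size sequence 40, 80, 160, ... is 10 * 2^n for n = 2, 3, 4, and so on. The small helper below enumerates and checks values of this form; it only restates the formula and does not reproduce how AVM chooses the base key size from aKeyImgSize, which the article does not describe. The class name is hypothetical.

```csharp
using System.Collections.Generic;

// Hypothetical helper restating the documented sequence 40, 80, 160, ... (10 * 2^n, n >= 2).
public static class BaseKeySizeSketch
{
    // Enumerates base key sizes not larger than max.
    public static IEnumerable<int> Sizes(int max)
    {
        for (int s = 40; s <= max; s *= 2)
        {
            yield return s;
            if (s > int.MaxValue / 2) yield break; // avoid overflow on the next doubling
        }
    }

    // True when side equals 10 * 2^n for some n >= 2.
    public static bool IsBaseKeySize(int side)
    {
        if (side < 40 || side % 10 != 0) return false;
        int q = side / 10;
        return (q & (q - 1)) == 0; // q is a power of two
    }
}
```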

void SetParam (CvAM_ParamType aParam, double aValue);

Sets the value (aValue) of a parameter (aParam) for the AVM instance.

The default parameter values are optimal for most cases, but you are welcome to experiment with the settings:

The parameter "ptRcgMxCellBase" sets the base value used to calculate the recognition threshold (default is 20000). Increasing this value increases the number of true positive recognitions (the object should be recognized and was recognized), but it also increases the number of false positive recognitions (the object should not be recognized but was recognized).
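When experimenting with ptRcgMxCellBase, it helps to count outcomes on a labeled set of test images. The hypothetical helper below tallies outcomes from (expected, recognized) pairs, where a false positive means the object should not be recognized but was; it does not call AVM, and how you obtain the labels is up to you.

```csharp
using System.Collections.Generic;

// Hypothetical evaluation helper for comparing parameter settings; it does not call AVM.
public static class RecognitionStatsSketch
{
    // Expected: the object should be recognized; Recognized: AVM reported it as recognized.
    public static (int TruePositives, int FalsePositives, int FalseNegatives, int TrueNegatives)
        Count(IEnumerable<(bool Expected, bool Recognized)> samples)
    {
        int tp = 0, fp = 0, fn = 0, tn = 0;
        foreach (var (expected, recognized) in samples)
        {
            if (expected && recognized) tp++; // should be recognized and was recognized
            else if (recognized) fp++;        // should not be recognized but was recognized
            else if (expected) fn++;          // should be recognized but was missed
            else tn++;                        // correctly not recognized
        }
        return (tp, fp, fn, tn);
    }
}
```

Running the same labeled set before and after raising ptRcgMxCellBase shows whether the gain in true positives outweighs the extra false positives.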

Optimization of the AVM search tree takes place at a user-defined frequency: associative bases that appeared during learning but did not collect the defined number of hits are removed from the search tree. Optimization can also be started directly by calling the "OptimizeAssociativeTree" method. The optimization period can be changed with the "ptOptimizeLoop" parameter, and the age (number of hits) of newly formed associative bases can be set with the "ptMaxClusterAge" parameter. By increasing the optimization frequency and adjusting the age of bases formed during learning, you can sift out extra "noise" associative bases.
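The optimization behaviour described above can be modelled with a toy hit-counting structure. This is only a simplified model: it counts read/write cycles instead of time, the fields standing in for ptOptimizeLoop and ptMaxClusterAge are hypothetical, and AVM's internal pruning rule may differ.

```csharp
using System;
using System.Collections.Generic;

// Toy model of periodic tree optimization; not AVM's internal algorithm.
public sealed class PruningSketch
{
    private readonly Dictionary<ulong, int> _hits = new(); // base index -> hit counter
    private readonly int _optimizeLoop; // stands in for ptOptimizeLoop (cycles between optimizations)
    private readonly int _minHits;      // stands in for ptMaxClusterAge (hits a base must collect)
    private int _cycles;

    public PruningSketch(int optimizeLoop, int minHits)
    {
        if (optimizeLoop < 1) throw new ArgumentOutOfRangeException(nameof(optimizeLoop));
        _optimizeLoop = optimizeLoop;
        _minHits = minHits;
    }

    public int Count => _hits.Count;

    public void AddBase(ulong index) => _hits[index] = 0;

    public void Hit(ulong index)
    {
        if (_hits.TryGetValue(index, out int h)) _hits[index] = h + 1;
    }

    // Called once per read/write cycle; optimizes when the period has elapsed.
    public void Tick()
    {
        if (++_cycles >= _optimizeLoop) Optimize();
    }

    // Counterpart of RestartTimeForOptimization in this model.
    public void Restart() => _cycles = 0;

    // Counterpart of OptimizeAssociativeTree: drop bases with too few hits.
    public void Optimize()
    {
        var weak = new List<ulong>();
        foreach (var kv in _hits)
            if (kv.Value < _minHits) weak.Add(kv.Key);
        foreach (var key in weak) _hits.Remove(key);
        _cycles = 0;
    }
}
```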

The parameter "ptTrackingDepth" is the number of frames without the object after which tracking stops. The default is 40 frames (about 1.5 seconds). It is useful to reduce this value for real-time robot control. For example, if an object disappears from the frame, tracking still continues for the next one and a half seconds, so the robot keeps steering toward an object that is no longer there and the control is incorrect. I use SetParam(ptTrackingDepth, 2) in my robot control experiments.
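The ptTrackingDepth rule can be modelled as a counter of frames without the object. The sketch assumes the misses are consecutive (a frame with the object resets the counter), which the article does not state; the class is hypothetical. The article's figures (40 frames, about 1.5 s) imply roughly 27 frames per second, so a depth of 2 corresponds to under 0.1 s of lag.

```csharp
using System;

// Hypothetical model of the ptTrackingDepth rule; not code from AVM_api.dll.
public sealed class TrackingDepthSketch
{
    private readonly int _depth; // plays the role of ptTrackingDepth
    private int _missed;         // consecutive frames without the object (assumption)

    public bool IsTracking { get; private set; } = true;

    public TrackingDepthSketch(int depth)
    {
        if (depth < 1) throw new ArgumentOutOfRangeException(nameof(depth));
        _depth = depth;
    }

    // Call once per processed frame.
    public void OnFrame(bool objectFound)
    {
        if (!IsTracking) return;
        _missed = objectFound ? 0 : _missed + 1;
        if (_missed >= _depth) IsTracking = false; // stop tracking the lost object
    }

    // Approximate time (seconds) the tracker keeps following a lost object.
    public static double LagSeconds(int depth, double fps) => depth / fps;
}
```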